Deep Latent-Variable Models for Natural Language

Yoon Kim, Sam Wiseman, Alexander Rush

EMNLP 2018

Tutorial Slides: PDF

Live Questions: Anonymous | Tweet

Tutorial Code (PyTorch, Pyro): Notebook

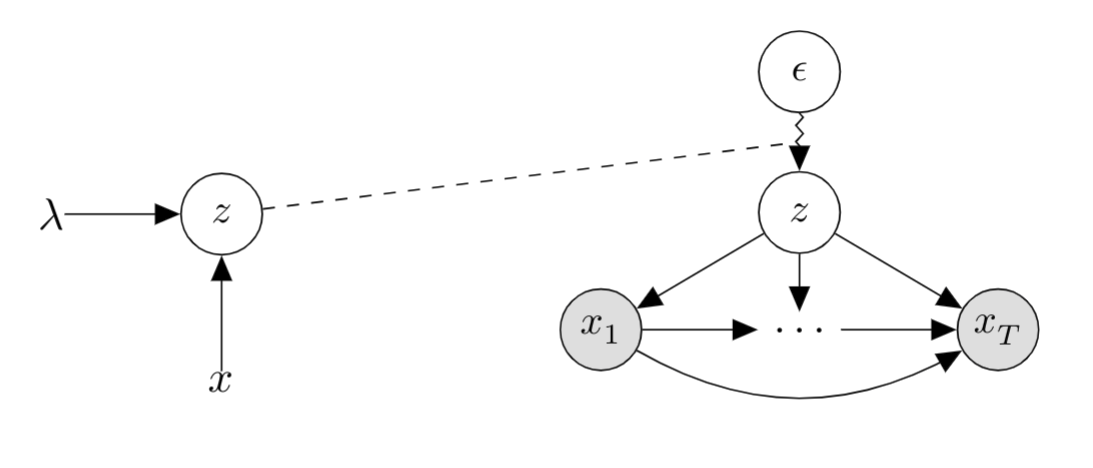

This tutorial covers deep latent-variable models both when exact inference over the latent variables is tractable and when it is not. The former case includes neural extensions of unsupervised tagging and parsing models. Our discussion of the latter case, where inference cannot be performed tractably, is restricted to continuous latent variables. In particular, we will discuss recent developments in neural variational inference (e.g., relating to variational autoencoders). We will highlight the challenges of applying these families of methods to NLP problems, and discuss recent successes and best practices.
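
As a rough illustration of the tractable/intractable distinction, the sketch below uses a toy two-component mixture that is not taken from the tutorial materials; the model, the function names, and the numbers are all assumptions chosen for illustration. With a small discrete latent variable, the log marginal likelihood can be computed exactly by summing over the latent values. The variational lower bound (ELBO) for any distribution `q` never exceeds that exact value, and matches it when `q` is the true posterior. When the sum (or integral) over the latent variable is not tractable, a bound of this kind is what neural variational inference optimizes instead.

```python
import math


def logsumexp(values):
    # Numerically stable log(sum(exp(v))) over a list of floats.
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_joint(x, z, log_prior, means):
    # Hypothetical toy model: log p(x, z) = log p(z) + log N(x; means[z], 1).
    log_lik = -0.5 * math.log(2 * math.pi) - 0.5 * (x - means[z]) ** 2
    return log_prior[z] + log_lik


def exact_log_marginal(x, log_prior, means):
    # Tractable case: sum the joint over every value of the discrete latent z.
    return logsumexp([log_joint(x, z, log_prior, means) for z in range(len(means))])


def posterior(x, log_prior, means):
    # Exact posterior p(z | x), available because the marginal is tractable.
    log_px = exact_log_marginal(x, log_prior, means)
    return [math.exp(log_joint(x, z, log_prior, means) - log_px)
            for z in range(len(means))]


def elbo(x, q, log_prior, means):
    # Evidence lower bound: E_q[log p(x, z) - log q(z)].
    return sum(qz * (log_joint(x, z, log_prior, means) - math.log(qz))
               for z, qz in enumerate(q) if qz > 0)


# Illustrative values only (assumptions, not from the tutorial).
log_prior = [math.log(0.5), math.log(0.5)]
means = [-1.0, 2.0]
x = 0.3

log_px = exact_log_marginal(x, log_prior, means)
elbo_uniform = elbo(x, [0.5, 0.5], log_prior, means)
elbo_posterior = elbo(x, posterior(x, log_prior, means), log_prior, means)
```

Here `elbo_uniform` falls below `log_px` because the uniform `q` differs from the posterior, while `elbo_posterior` recovers `log_px` up to floating-point error.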